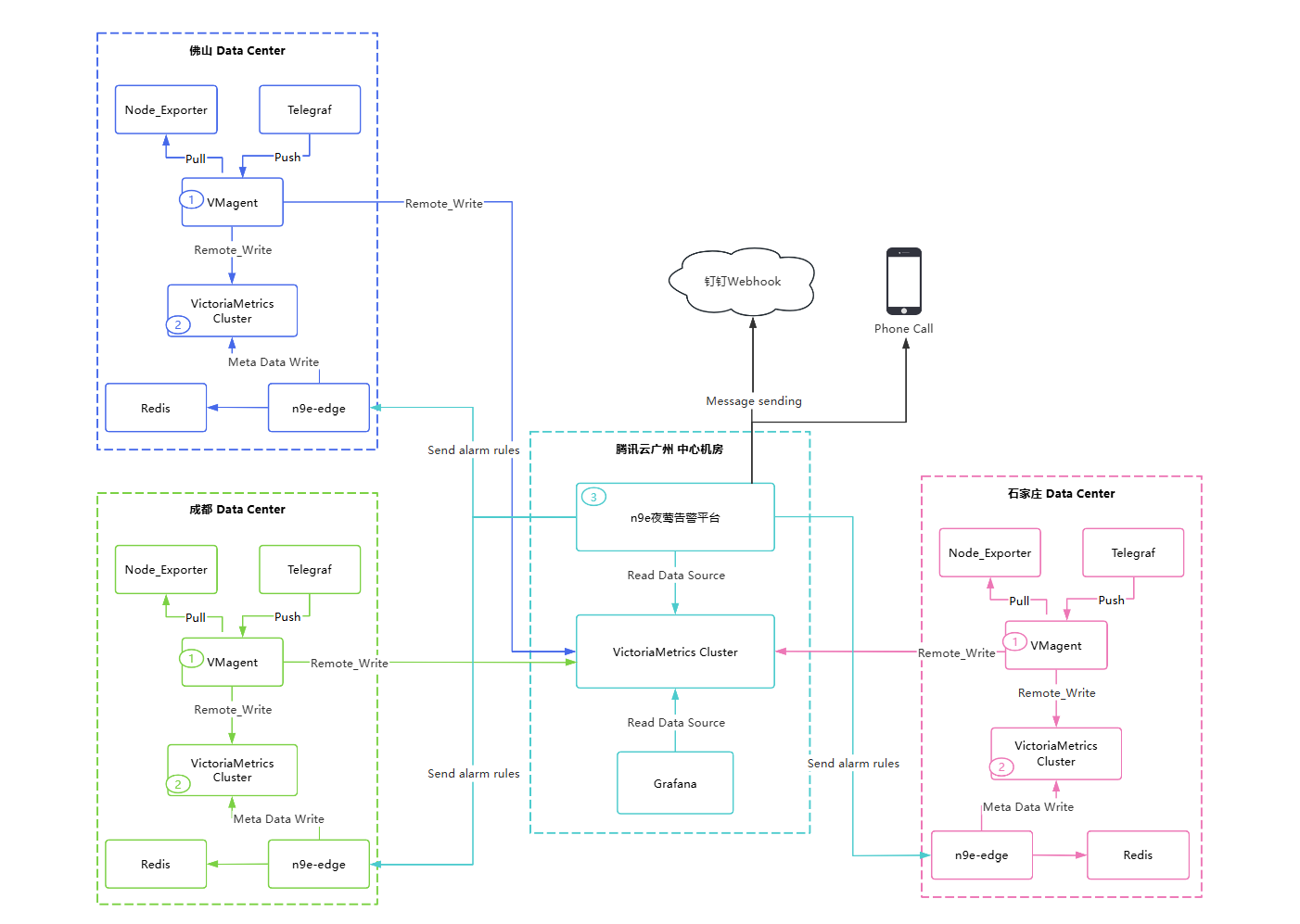

1. Overall Architecture: Central Data Center + Edge Data Centers

1.1 Component High Availability

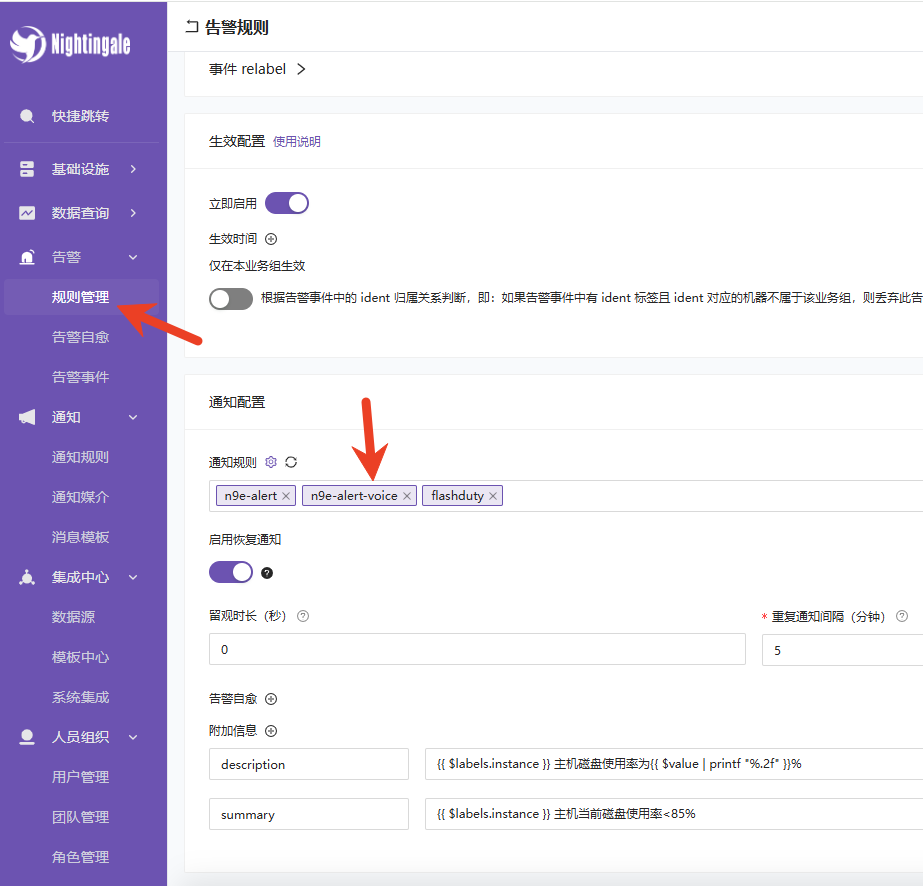

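| Component | Central data center | Edge data center |
|---|---|---|
| Nightingale service | 3-node K8S cluster + load balancer | n9e-edge + vmagent × 2 |
| Time-series database | VictoriaMetrics cluster | VictoriaMetrics cluster × 3 |
| Cache | Cloud Redis | Redis cluster × 3 |
| Relational database | Cloud MySQL | Not deployed |
| Collectors | k8s metrics-server | Categraf + Telegraf + Node_exporter |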

1.2 Edge Autonomy

- If the central data center fails, n9e-edge in each edge data center sends the alert notifications.

1.3 Data Storage Plan

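| Data type | Central data center | Edge data center |
|---|---|---|
| Monitoring metrics | Long-term storage (180 days) | Long-term storage (180 days) |
| Alert events | Full storage | Only events not yet synced |
| Alert rules | Primary copy | Replica |
| User data | Primary copy | Cache |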

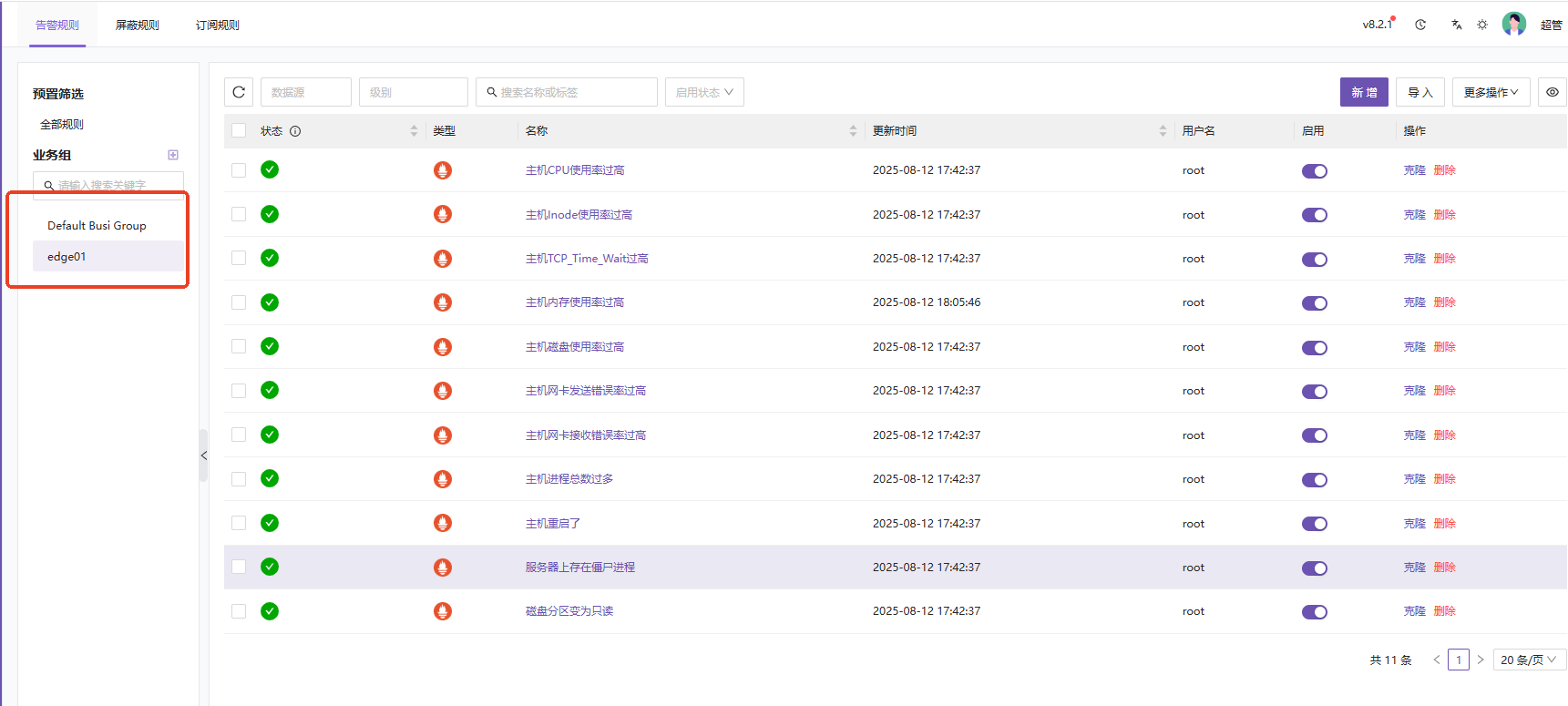

2. Feature Configuration

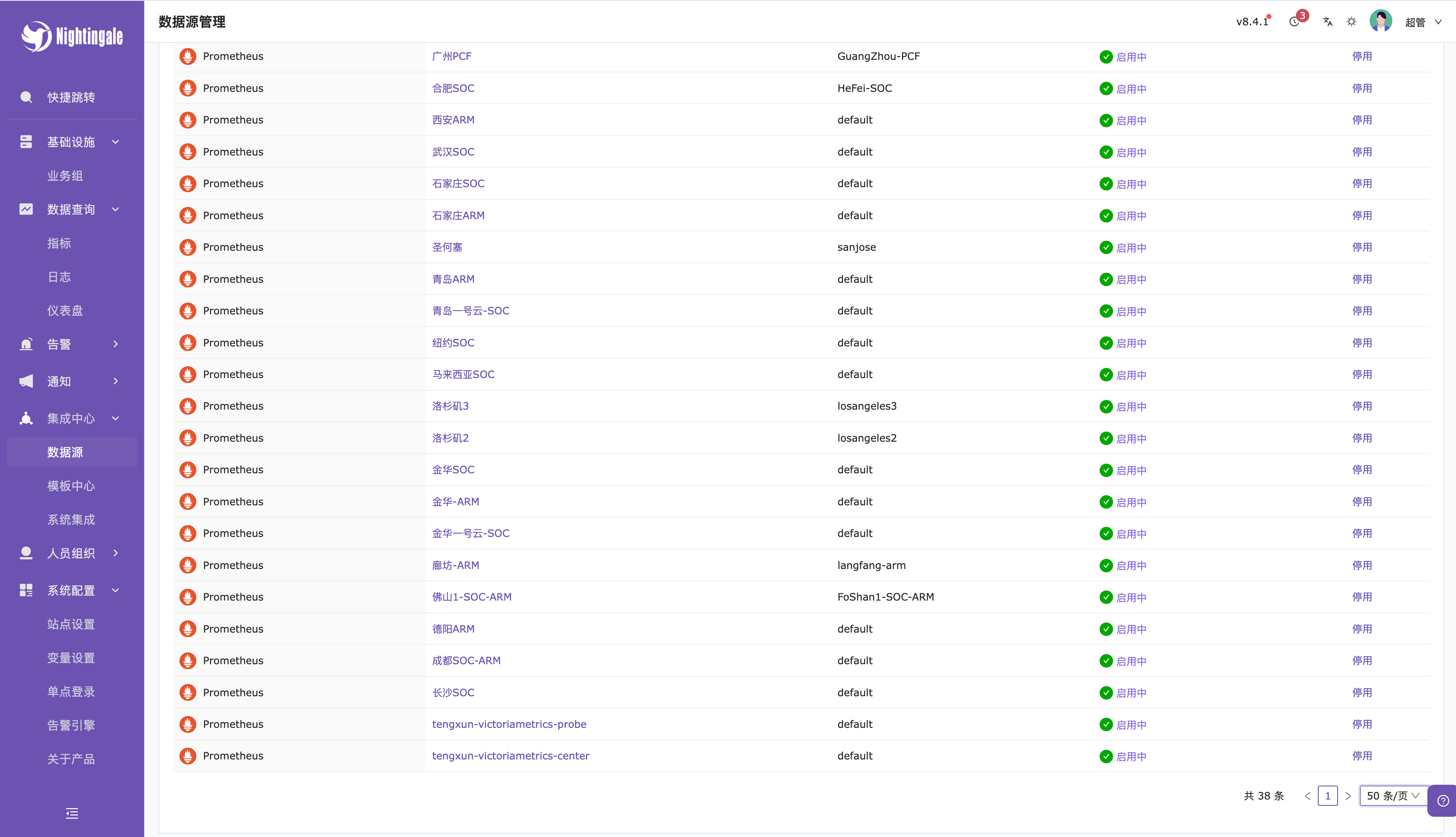

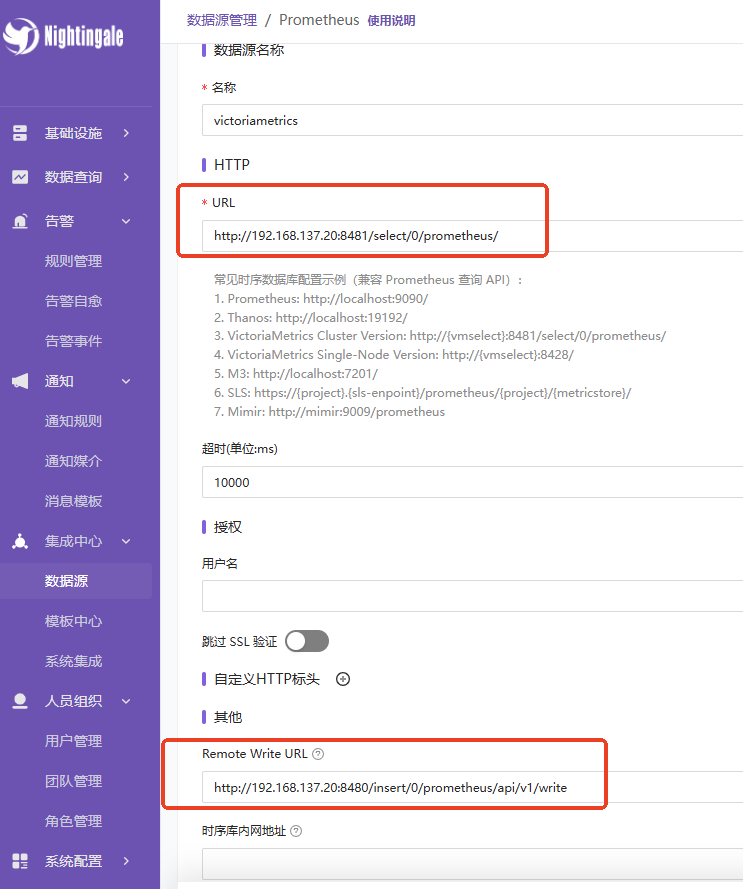

2.1 Configure the Data Source

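Set the time-series database type to VictoriaMetrics, then fill in:

- Query URL (vmselect): http://192.168.137.20:8481/select/0/prometheus/
- Remote write URL (vminsert): http://192.168.137.20:8480/insert/0/prometheus/api/v1/write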
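Both URLs follow the VictoriaMetrics cluster layout: vmselect (default port 8481) serves Prometheus-compatible queries under `/select/<accountID>/prometheus/`, and vminsert (default port 8480) accepts remote write under `/insert/<accountID>/prometheus/api/v1/write`, where `0` is the tenant (account) ID. The Go sketch below derives both URLs from a host and tenant ID; `vmClusterURLs` is a hypothetical helper and assumes the default ports.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// vmClusterURLs is a hypothetical helper that builds the query (vmselect)
// and remote-write (vminsert) URLs of a VictoriaMetrics cluster for one
// tenant, assuming the default ports 8481 and 8480.
func vmClusterURLs(host string, accountID int) (query, write string) {
	tenant := strconv.Itoa(accountID)
	query = "http://" + net.JoinHostPort(host, "8481") + "/select/" + tenant + "/prometheus/"
	write = "http://" + net.JoinHostPort(host, "8480") + "/insert/" + tenant + "/prometheus/api/v1/write"
	return query, write
}

func main() {
	q, w := vmClusterURLs("192.168.137.20", 0)
	fmt.Println(q)
	fmt.Println(w)
}
```

Keep the same account ID in both URLs: data written to one tenant is only visible when querying that same tenant.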

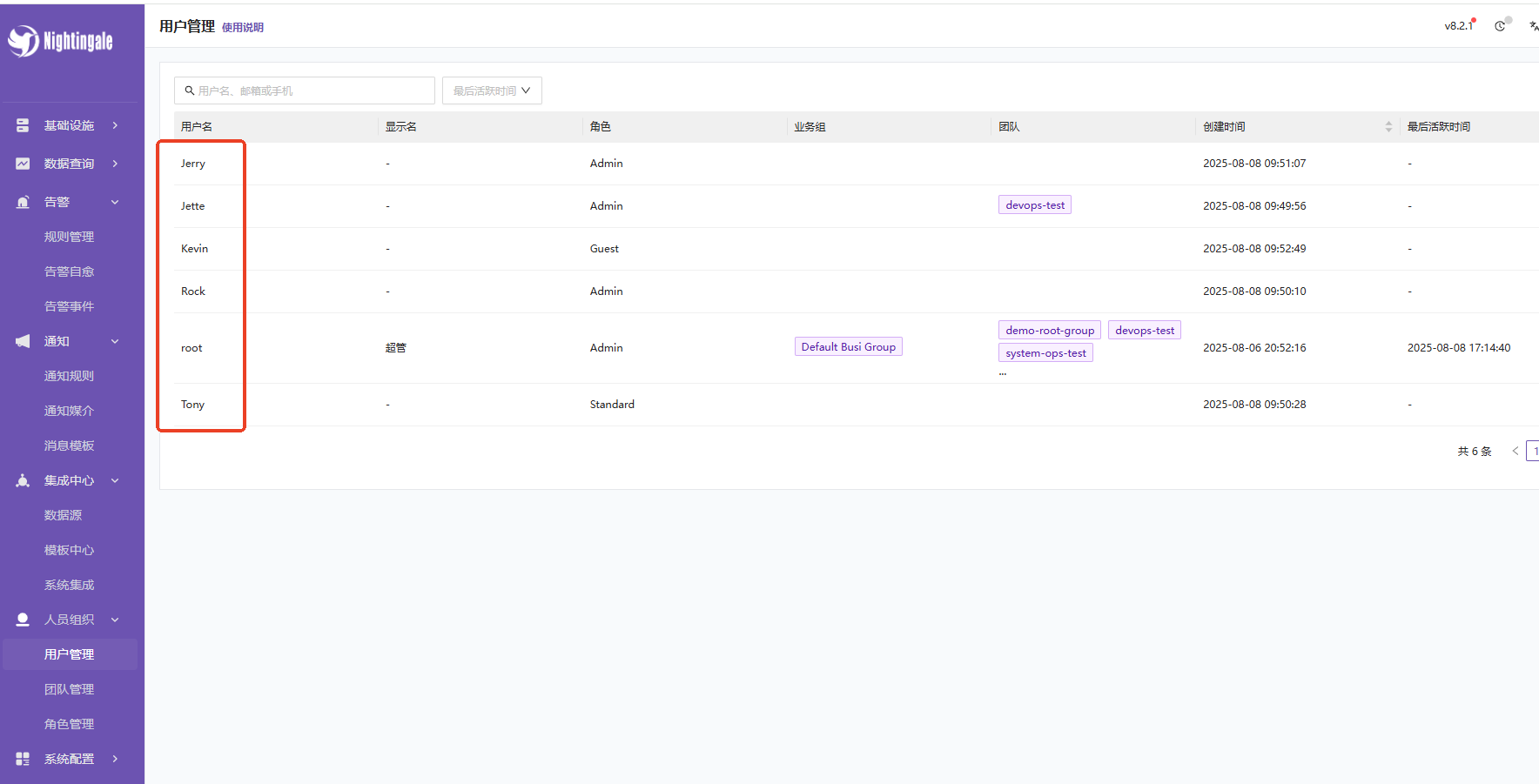

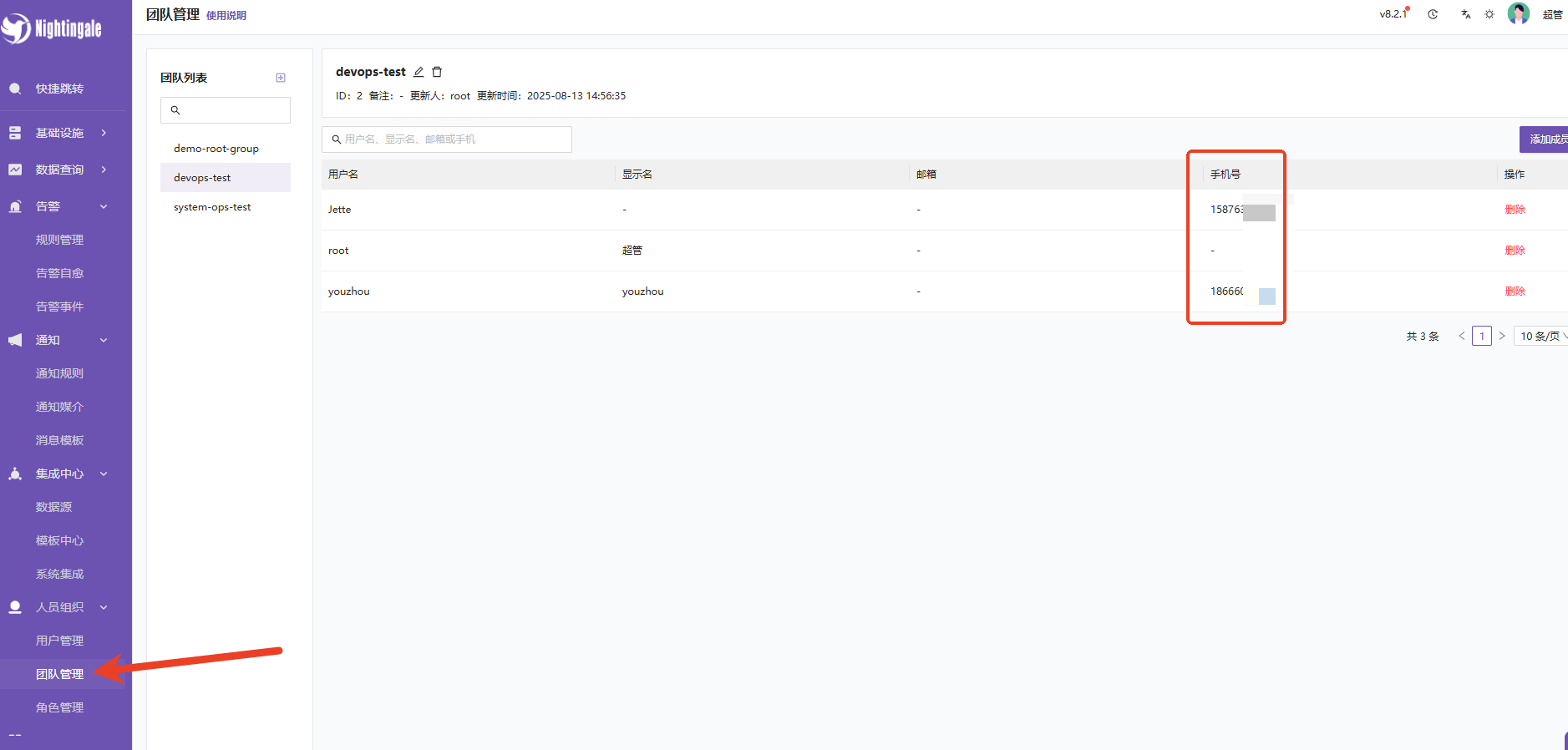

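2.2 Create teams. Teams can be organized by department or business group, which makes it easier to route alerts to them later.

2.3 Create users and fill in their mobile numbers; alert messages use the mobile number to @-mention the responsible person. Add each user to the appropriate team.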

2.4 Color-coded message template (green for recovery; red, orange, and blue for critical, warning, and info)

```
{{if $event.IsRecovered}}{{else}}{{end}}{{$event.RuleName}}

- - -

{{if $event.IsRecovered}}

<font color='#008800'>**Alert name**:</font> {{$event.AnnotationsJSON.summary}}

<font color='#008800'>**Region**:</font> {{if $event.TagsMap.business}}{{$event.TagsMap.business}}{{else}}N/A{{end}}

<font color='#008800'>**Host**:</font> {{if $event.TagsMap.instance}}{{$event.TagsMap.instance}}{{else}}N/A{{end}}

<font color='#008800'>**Project**:</font> {{if $event.TagsMap.project}}{{$event.TagsMap.project}}{{else}}N/A{{end}}

<font color='#008800'>**Service**:</font> {{if $event.TagsMap.service}}{{$event.TagsMap.service}}{{else}}N/A{{end}}

<font color='#008800'>**Severity**:</font> {{if eq $event.Severity 1}}critical{{else if eq $event.Severity 2}}warning{{else}}info{{end}}

<font color='#008800'>**Details**:</font> {{if $event.AnnotationsJSON.description}}{{$event.AnnotationsJSON.description}}{{else}}{{$event.AnnotationsJSON.summary}}{{end}}

<font color='#008800'>**Triggered at**:</font> {{timeformat $event.TriggerTime "2006.01.02 15:04:05"}}

<font color='#008800'>**Recovered at**:</font> {{timeformat $event.LastEvalTime "2006.01.02 15:04:05"}}

{{else}}

{{if eq $event.Severity 1}} <!-- Critical -->

<font color='#FF0000'>**Alert name**:</font> {{$event.AnnotationsJSON.summary}}

<font color='#FF0000'>**Region**:</font> {{if $event.TagsMap.business}}{{$event.TagsMap.business}}{{else}}N/A{{end}}

<font color='#FF0000'>**Host**:</font> {{if $event.TagsMap.instance}}{{$event.TagsMap.instance}}{{else}}N/A{{end}}

<font color='#FF0000'>**Project**:</font> {{if $event.TagsMap.project}}{{$event.TagsMap.project}}{{else}}N/A{{end}}

<font color='#FF0000'>**Service**:</font> {{if $event.TagsMap.service}}{{$event.TagsMap.service}}{{else}}N/A{{end}}

<font color='#FF0000'>**Severity**:</font> critical

<font color='#FF0000'>**Details**:</font> {{if $event.AnnotationsJSON.description}}{{$event.AnnotationsJSON.description}}{{else}}{{$event.AnnotationsJSON.summary}}{{end}}

<font color='#FF0000'>**Triggered at**:</font> {{timeformat $event.TriggerTime "2006.01.02 15:04:05"}}

{{else if eq $event.Severity 2}} <!-- Warning -->

<font color='#FFA500'>**Alert name**:</font> {{$event.AnnotationsJSON.summary}}

<font color='#FFA500'>**Region**:</font> {{if $event.TagsMap.business}}{{$event.TagsMap.business}}{{else}}N/A{{end}}

<font color='#FFA500'>**Host**:</font> {{if $event.TagsMap.instance}}{{$event.TagsMap.instance}}{{else}}N/A{{end}}

<font color='#FFA500'>**Project**:</font> {{if $event.TagsMap.project}}{{$event.TagsMap.project}}{{else}}N/A{{end}}

<font color='#FFA500'>**Service**:</font> {{if $event.TagsMap.service}}{{$event.TagsMap.service}}{{else}}N/A{{end}}

<font color='#FFA500'>**Severity**:</font> warning

<font color='#FFA500'>**Details**:</font> {{if $event.AnnotationsJSON.description}}{{$event.AnnotationsJSON.description}}{{else}}{{$event.AnnotationsJSON.summary}}{{end}}

<font color='#FFA500'>**Triggered at**:</font> {{timeformat $event.TriggerTime "2006.01.02 15:04:05"}}

{{else}} <!-- Info -->

<font color='#0000FF'>**Alert name**:</font> {{$event.AnnotationsJSON.summary}}

<font color='#0000FF'>**Region**:</font> {{if $event.TagsMap.business}}{{$event.TagsMap.business}}{{else}}N/A{{end}}

<font color='#0000FF'>**Host**:</font> {{if $event.TagsMap.instance}}{{$event.TagsMap.instance}}{{else}}N/A{{end}}

<font color='#0000FF'>**Project**:</font> {{if $event.TagsMap.project}}{{$event.TagsMap.project}}{{else}}N/A{{end}}

<font color='#0000FF'>**Service**:</font> {{if $event.TagsMap.service}}{{$event.TagsMap.service}}{{else}}N/A{{end}}

<font color='#0000FF'>**Severity**:</font> info

<font color='#0000FF'>**Details**:</font> {{if $event.AnnotationsJSON.description}}{{$event.AnnotationsJSON.description}}{{else}}{{$event.AnnotationsJSON.summary}}{{end}}

<font color='#0000FF'>**Triggered at**:</font> {{timeformat $event.TriggerTime "2006.01.02 15:04:05"}}

{{end}}

{{end}}
```
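The template uses Go template syntax. As a minimal sketch, the program below renders the severity mapping and the `N/A` fallback with Go's standard `text/template`. The `Event` struct is a hypothetical, trimmed-down stand-in for the event Nightingale passes to templates, and the leading `{{$event := .}}` is added only so that the `$event` syntax works in plain `text/template`; Nightingale helpers such as `timeformat` are not reproduced.

```go
package main

import (
	"fmt"
	"os"
	"strings"
	"text/template"
)

// Event is a hypothetical, trimmed-down stand-in for the alert event;
// only the fields used by the template below are included.
type Event struct {
	IsRecovered     bool
	Severity        int
	TagsMap         map[string]string
	AnnotationsJSON map[string]string
}

// tpl reuses the severity mapping and the N/A fallback from the template
// above. A missing map key evaluates as false in {{if}}, so N/A is printed.
const tpl = `{{$event := .}}{{if $event.IsRecovered}}recovered{{else}}{{if eq $event.Severity 1}}critical{{else if eq $event.Severity 2}}warning{{else}}info{{end}}{{end}} host={{if $event.TagsMap.instance}}{{$event.TagsMap.instance}}{{else}}N/A{{end}}`

// render parses tpl and executes it against one event.
func render(e Event) (string, error) {
	t, err := template.New("alert").Parse(tpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, e); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := render(Event{Severity: 2, TagsMap: map[string]string{}})
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(out) // warning host=N/A
}
```

Because an empty or missing tag is falsy in `{{if}}`, each field falls back to `N/A` instead of printing `<no value>`.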

2.4.1 Alert and Recovery Examples

2.5 Deploy the Categraf Collector (required for the alert self-healing feature)

```shell
# Download the release package (all plugins are bundled) from:
# https://github.com/flashcatcloud/categraf/releases
tar zxf categraf-v0.4.14-linux-amd64.tar.gz -C /data/
mv /data/categraf-v0.4.14-linux-amd64 /data/categraf

# Edit the main configuration file
cd /data/categraf
vim conf/config.toml
```

```toml
[global]
# Whether to print the configuration to stdout at startup
print_configs = false

# Host name, used as this machine's unique identifier. Categraf automatically
# attaches an agent_hostname=$hostname label to every time series.
# If hostname is empty, the local machine name is used.
# If hostname is set, the configured value is used as the host name.
# The configured string may contain two variables, $hostname and $ip,
# which are replaced with the local machine name and the local IP.
# Run with --test to check that the output is what you expect;
# in --test mode this value appears as the agent_hostname=xxx label.
hostname = ""

# If true, the agent_hostname=$hostname label is not attached to time series
omit_hostname = false

# Timestamp precision of time series, ms or s. The default is ms because
# the remote write protocol uses milliseconds for timestamps.
precision = "ms"

# Global collection interval: collect every 15 seconds
interval = 15

# Global labels, one per line; they are attached to every time series
[global.labels]
service="pvenode"
business="SZ"
project="yw"

[log]
# Log output; the default is standard output (stdout).
# If a file name is given, logs are written to that file.
file_name = "stdout"
# The options below have no effect when file_name is stdout or stderr.
# Maximum size of a log file, in MB
max_size = 100
# Maximum number of days to retain old log files, based on the timestamp encoded in their file names
max_age = 30
# Maximum number of old log files to retain
max_backups = 7
# Whether backup file timestamps use the machine's local time
local_time = true
# Whether rotated log files are compressed with gzip
compress = true

# Time series destined for the backend are first put into Categraf's in-memory
# queues, one queue per collection plugin.
# chan_size is the maximum queue length; batch is how many items are taken
# from the queue per send to the backend.
[writer_opt]
# default: 2000
batch = 2000
# channel (queue) size
chan_size = 10000

# Backend configuration. In TOML, [[...]] denotes an array, so multiple writers
# can be configured, each with its own URL and basic auth credentials.
[[writers]]
# When writing directly to Nightingale, mind the port:
# 19000 for v5, 17000 for v6+.
# Here data is written to vmagent, which aggregates it and rewrites labels
# before pushing it to vminsert.
url = "http://127.0.0.1:8429/api/v1/write"
# Basic auth username
basic_auth_user = ""
# Basic auth password
basic_auth_pass = ""
# timeout settings, unit: ms
timeout = 10000
dial_timeout = 3000
max_idle_conns_per_host = 100

# Whether to enable the HTTP (push gateway) endpoint
[http]
enable = false
address = ":9100"
print_access = false
run_mode = "release"

# Whether to enable the alert self-healing (ibex) agent
[ibex]
enable = true
## ibex flush interval
interval = "2000ms"
## n9e ibex server rpc address
servers = ["192.168.137.20:20090"]
## temp script dir
meta_dir = "./meta"

# Heartbeat reporting (carries resource information shown in the machine list), for Nightingale v6+.
# Remove this section for v5.
[heartbeat]
enable = true
# If the heartbeat carries the parameter gid=<group_id>, the machine is automatically assigned to that business group
# report os version cpu.util mem.util metadata
url = "http://192.168.137.20:17000/v1/n9e/heartbeat"
# interval, unit: s
interval = 10
# Basic auth username
basic_auth_user = ""
# Basic auth password
basic_auth_pass = ""
## Optional headers
# headers = ["X-From", "categraf", "X-Xyz", "abc"]
# timeout settings, unit: ms
timeout = 5000
dial_timeout = 3000
max_idle_conns_per_host = 100

# embedded prometheus agent mode
[prometheus]
# Whether to enable prometheus agent mode
enable = false
# A Prometheus scrape YAML file can be used directly to describe scrape jobs
scrape_config_file = "/path/to/in_cluster_scrape.yaml"
## log level, debug warn info error
log_level = "info"
## wal file storage path, default ./data-agent
# wal_storage_path = "/path/to/storage"
## wal reserve time duration, default value is 2 hours
# wal_min_duration = 2
```
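The `hostname` comments above describe a simple substitution rule. The Go sketch below models that rule so its effect on the `agent_hostname` label is easy to see; `expandHostname` is hypothetical and is not Categraf's implementation, and the sample name and IP are made up. Running `./categraf --test` remains the authoritative check.

```go
package main

import (
	"fmt"
	"strings"
)

// expandHostname models the rule described in config.toml: an empty value
// falls back to the machine name; otherwise $hostname and $ip are replaced
// with the machine name and IP. Illustrative only, not Categraf's code.
func expandHostname(configured, machineName, ip string) string {
	if configured == "" {
		return machineName
	}
	r := strings.NewReplacer("$hostname", machineName, "$ip", ip)
	return r.Replace(configured)
}

func main() {
	fmt.Println(expandHostname("", "pve01", "10.0.0.5"))
	fmt.Println(expandHostname("$hostname-$ip", "pve01", "10.0.0.5"))
}
```

The resulting value is the machine name that Nightingale lists, so it is also the name to use in the self-healing template's host field.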

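```shell
# Install; this registers a systemd service automatically
./categraf --install

# Start
systemctl start categraf.service
systemctl status categraf.service

# Check the logs for errors when writing data
journalctl -f -u categraf.service
```

To use a plugin, add its targets and collection rules to that plugin's configuration file.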

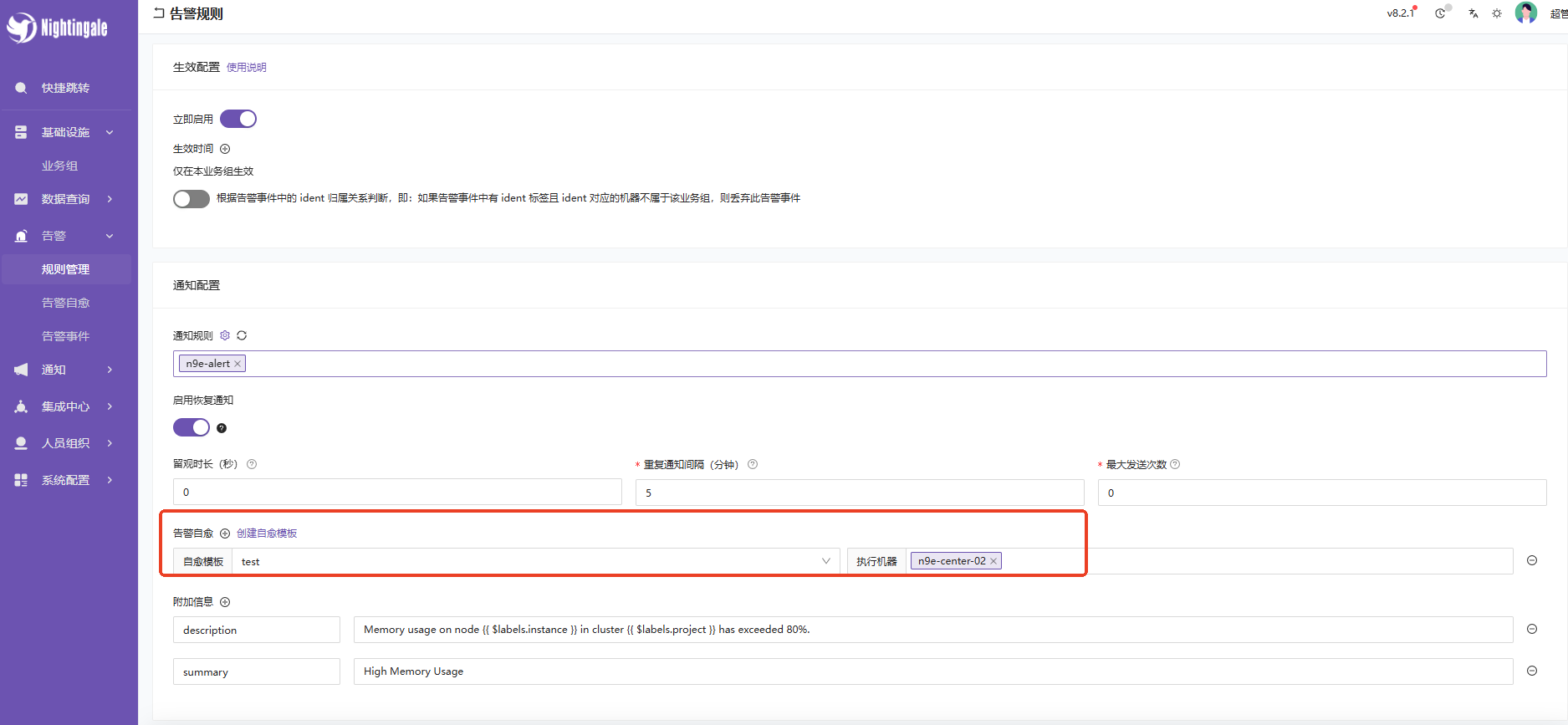
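The `[writer_opt]` comments describe a bounded in-memory queue per plugin (`chan_size`) that is drained in batches of at most `batch` samples per send. A minimal Go sketch of that pattern with a buffered channel follows; `enqueue` and `drainBatches` are hypothetical names, and dropping samples when the queue is full is an assumption for illustration, not documented Categraf behavior.

```go
package main

import "fmt"

// enqueue adds a sample to a bounded queue (a buffered channel whose capacity
// plays the role of chan_size). It returns false when the queue is full;
// dropping on overflow is an assumption made for this sketch.
func enqueue(q chan string, sample string) bool {
	select {
	case q <- sample:
		return true
	default:
		return false
	}
}

// drainBatches empties the queue into slices of at most batch samples,
// mirroring one send to the backend per batch.
func drainBatches(q chan string, batch int) [][]string {
	if batch < 1 {
		batch = 1
	}
	var out [][]string
	for len(q) > 0 {
		n := batch
		if len(q) < n {
			n = len(q)
		}
		b := make([]string, 0, n)
		for i := 0; i < n; i++ {
			b = append(b, <-q)
		}
		out = append(out, b)
	}
	return out
}

func main() {
	q := make(chan string, 5) // chan_size = 5 here (10000 in the config above)
	for i := 0; i < 7; i++ {
		if !enqueue(q, fmt.Sprintf("sample-%d", i)) {
			fmt.Println("queue full, dropped sample", i)
		}
	}
	for _, b := range drainBatches(q, 2) { // batch = 2 here (2000 in the config above)
		fmt.Println(len(b), b)
	}
}
```

A larger `chan_size` absorbs longer backend outages at the cost of memory, while `batch` trades per-request overhead against request size.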

2.6 Configure Alert Self-Healing

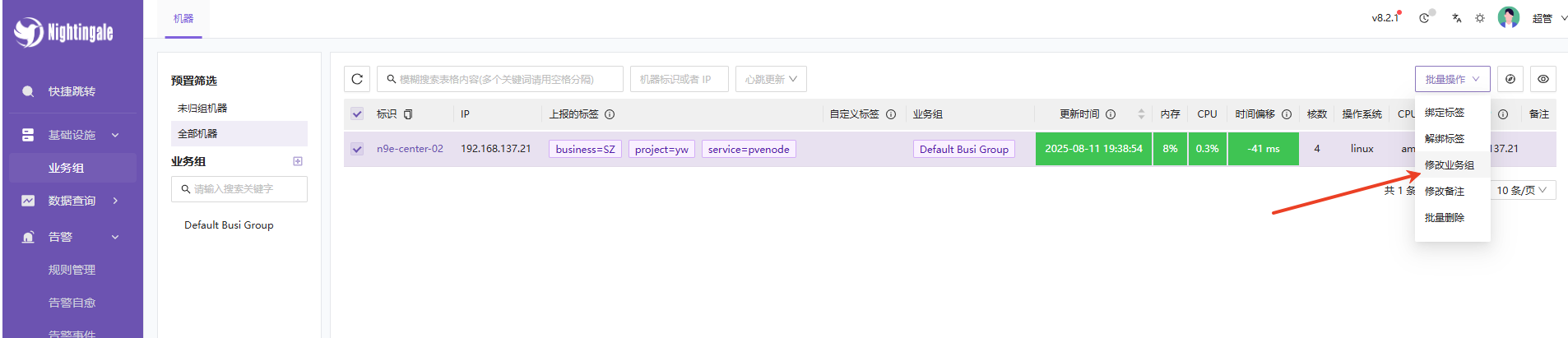

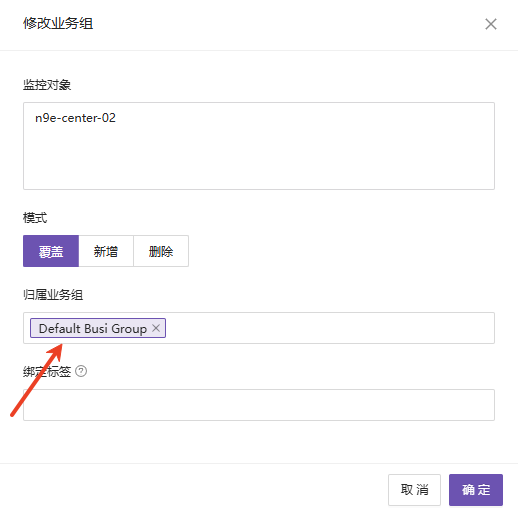

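2.6.1 Only machines running Categraf are automatically registered in the All Machines list. Add each machine to the business group that owns the alert rules.

- Note: the machine must be in the corresponding business group; otherwise the self-healing template will not be triggered.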

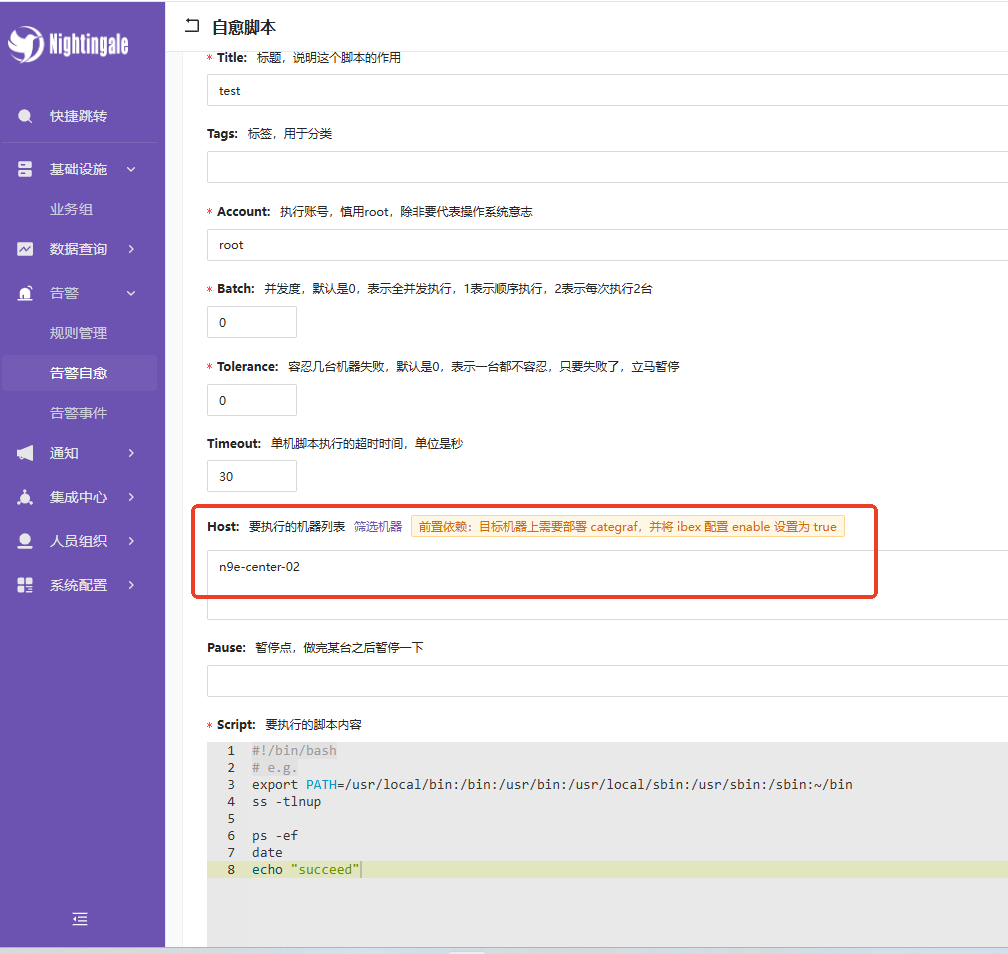

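2.6.2 Create the self-healing template in the corresponding business group

- The host field must contain the machine name; an IP address will not work.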
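A hypothetical pre-flight check for the host field: it rejects IP addresses with `net.ParseIP` and requires the name to appear among the machines registered by Categraf (2.6.1). `checkSelfHealHost` and the `registered` map are illustrative assumptions; in practice the list is whatever the Nightingale machine list shows.

```go
package main

import (
	"fmt"
	"net"
)

// checkSelfHealHost is a hypothetical validation of a self-healing template's
// host field: it must be non-empty, must not be an IP address, and must be one
// of the machine names registered by Categraf.
func checkSelfHealHost(host string, registered map[string]bool) error {
	if host == "" {
		return fmt.Errorf("host is empty")
	}
	if net.ParseIP(host) != nil {
		return fmt.Errorf("%q is an IP address; use the machine name instead", host)
	}
	if !registered[host] {
		return fmt.Errorf("%q is not in the registered machine list", host)
	}
	return nil
}

func main() {
	registered := map[string]bool{"pve01": true} // hypothetical machine list
	for _, h := range []string{"pve01", "10.0.0.5", "pve02"} {
		fmt.Println(h, "->", checkSelfHealHost(h, registered))
	}
}
```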

2.6.3 In the alert rules (in bulk or one rule at a time), select the corresponding self-healing template.

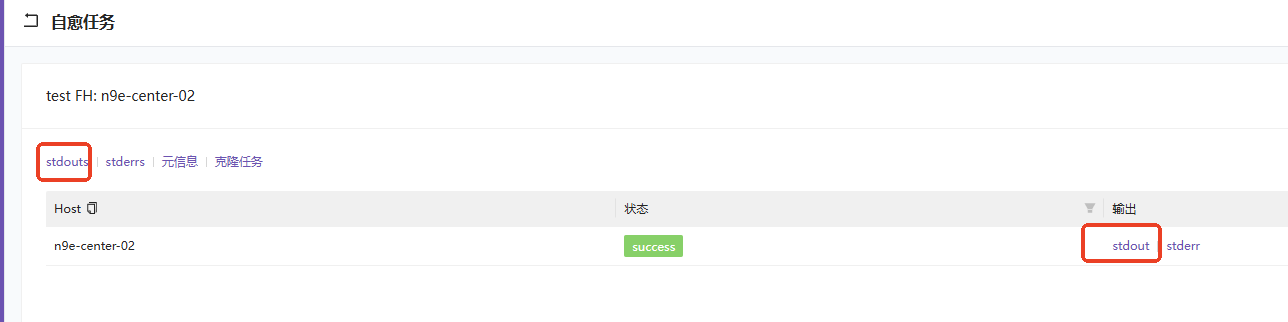

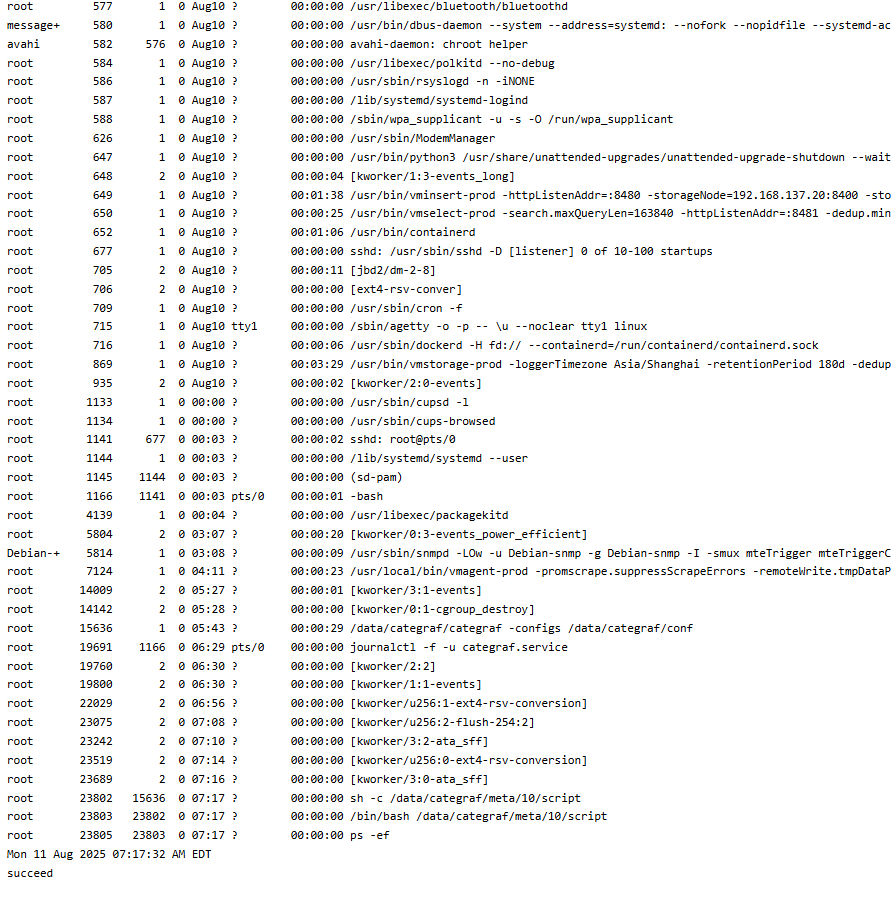

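2.6.4 Trigger a test alert and check whether the self-healing task history shows an execution result.

2.6.5 Open the task's stdouts output to verify that the script ran successfully and that its status is success.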

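3. Deploy n9e-edge in the Edge Data Centers

- Dependencies: a VictoriaMetrics cluster and a Redis cluster

- The edge's Pushgw.Writers points to the VictoriaMetrics in the local edge data center. What it writes there is alert metadata rather than monitoring data, but it shares the same cluster as the monitoring data.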

Redis is required, and each edge data center needs its own instance. For this simulation, only a standalone instance is deployed:

```yaml
# docker-compose.yml
version: '3.7'
networks:
  n9e-edge:
    driver: bridge
services:
  redis:
    image: "redis:6.2"
    container_name: redis
    hostname: redis
    restart: always
    environment:
      TZ: Asia/Shanghai
    networks:
      - n9e-edge
    ports:
      - "6379:6379"
```

```shell
docker-compose up -d
```

Deploy a single-node VictoriaMetrics for the simulation:

```shell
wget https://github.com/VictoriaMetrics/VictoriaMetrics/releases/download/v1.122.0/victoria-metrics-linux-amd64-v1.122.0.tar.gz
tar zxf victoria-metrics-linux-amd64-v1.122.0.tar.gz -C /usr/bin

cat > /etc/systemd/system/victoria-metrics.service <<'EOF'
[Unit]
Description=Linux VictoriaMetrics Server
Documentation=https://docs.victoriametrics.com/
After=network.target

[Service]
# -retentionPeriod without a suffix is counted in months
ExecStart=/usr/bin/victoria-metrics-prod -httpListenAddr=0.0.0.0:8428 -storageDataPath=/data/victoria-metrics -retentionPeriod=3

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable --now victoria-metrics.service
systemctl status victoria-metrics.service
```
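Because VictoriaMetrics reads a `-retentionPeriod` value without a suffix as months, `-retentionPeriod=3` keeps roughly three months of data on this test node; a value such as `180d` would match the 180-day plan in 1.3. The Go sketch below converts retention values to approximate days for sanity-checking. `retentionDays` is hypothetical, handles only the `h`, `d`, `w`, and `y` suffixes, and approximates a month as 31 days and a year as 365 days.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// retentionDays converts a -retentionPeriod value to approximate days.
// No suffix means months; h, d, w and y mean hours, days, weeks and years.
// Month = 31 days and year = 365 days are approximations for this sketch.
func retentionDays(v string) (float64, error) {
	unit := 31.0 // days per month when no suffix is given
	num := v
	if n := len(v); n > 0 {
		switch v[n-1] {
		case 'h':
			unit, num = 1.0/24, v[:n-1]
		case 'd':
			unit, num = 1, v[:n-1]
		case 'w':
			unit, num = 7, v[:n-1]
		case 'y':
			unit, num = 365, v[:n-1]
		}
	}
	f, err := strconv.ParseFloat(strings.TrimSpace(num), 64)
	if err != nil {
		return 0, fmt.Errorf("invalid retention %q: %w", v, err)
	}
	return f * unit, nil
}

func main() {
	for _, v := range []string{"3", "180d"} {
		d, err := retentionDays(v)
		if err != nil {
			panic(err)
		}
		fmt.Printf("-retentionPeriod=%s is about %.0f days\n", v, d)
	}
}
```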

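Deploy n9e-edge; it is already included in the official Nightingale release package:

```shell
wget https://github.com/ccfos/nightingale/releases/download/v8.2.2/n9e-v8.2.2-linux-amd64.tar.gz
mkdir /data/n9e-edge/
tar zxf n9e-v8.2.2-linux-amd64.tar.gz -C /data/n9e-edge/
cd /data/n9e-edge
```

In `etc/edge/edge.toml`, set the BasicAuthPass token so that it matches the Nightingale configuration file, enable APIForService, adjust the log level, enable Ibex, and change the Redis address. All edge instances in the same edge data center must use the same EngineName.

```shell
vim etc/edge/edge.toml
```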

```toml
[Global]
RunMode = "release"

[CenterApi]
# Address of Nightingale in the central data center. BasicAuthPass must also
# match the token in the Nightingale (n9e) configuration file.
Addrs = ["http://192.168.137.20:17000"]
BasicAuthUser = "user001"
BasicAuthPass = "ccc26da7b9aba533cbb263sdklf902384"
# unit: ms
Timeout = 9000

[Log]
# log write dir
Dir = "logs"
# log level: DEBUG INFO WARNING ERROR
# Use WARNING in production
Level = "DEBUG"
# stdout, stderr, file
Output = "stdout"
# # rotate by time
# KeepHours = 4
# # rotate by size
# RotateNum = 3
# # unit: MB
# RotateSize = 256

[HTTP]
# http listening address
Host = "0.0.0.0"
# http listening port
Port = 19000
# https cert file path
CertFile = ""
# https key file path
KeyFile = ""
# whether to print the access log
PrintAccessLog = false
# whether to enable pprof
PProf = false
# expose prometheus /metrics?
ExposeMetrics = true
# http graceful shutdown timeout, unit: s
ShutdownTimeout = 30
# max content length: 64M
MaxContentLength = 67108864
# http server read timeout, unit: s
ReadTimeout = 20
# http server write timeout, unit: s
WriteTimeout = 40
# http server idle timeout, unit: s
IdleTimeout = 120

[HTTP.APIForAgent]
Enable = true
# [HTTP.APIForAgent.BasicAuth]
# user001 = "ccc26da7b9aba533cbb263a36c07dcc5"

# Must be set to true, otherwise n9e-edge fails to start
[HTTP.APIForService]
Enable = true
[HTTP.APIForService.BasicAuth]
# The token must match the one in the Nightingale (n9e) configuration file
user001 = "ccc26da7b9aba533cbb263sdklf902384"

[Alert]
[Alert.Heartbeat]
# auto detect if blank
IP = ""
# unit ms
Interval = 1000
# All edge instances in the same data center must use the same name
EngineName = "shenzhen-region"

# [Alert.Alerting]
# NotifyConcurrency = 10

[Pushgw]
# use target labels in database instead of in series
LabelRewrite = true
# # default busigroup key name
# BusiGroupLabelKey = "busigroup"
ForceUseServerTS = true
# [Pushgw.DebugSample]
# ident = "xx"
# __name__ = "xx"
# [Pushgw.WriterOpt]
# QueueMaxSize = 1000000
# QueuePopSize = 1000

[[Pushgw.Writers]]
# Single-node VictoriaMetrics in the edge data center
Url = "http://192.168.137.23:8428/api/v1/write"
# Basic auth username
BasicAuthUser = ""
# Basic auth password
BasicAuthPass = ""
Headers = ["X-From", "n9e"]
# timeout settings, unit: ms
Timeout = 10000
DialTimeout = 3000
TLSHandshakeTimeout = 30000
ExpectContinueTimeout = 1000
IdleConnTimeout = 90000
# time duration, unit: ms
KeepAlive = 30000
MaxConnsPerHost = 0
MaxIdleConns = 100
MaxIdleConnsPerHost = 100
## Optional TLS Config
# UseTLS = false
# TLSCA = "/etc/n9e/ca.pem"
# TLSCert = "/etc/n9e/cert.pem"
# TLSKey = "/etc/n9e/key.pem"
# InsecureSkipVerify = false
# [[Pushgw.Writers.WriteRelabels]]
# Action = "replace"
# SourceLabels = ["__address__"]
# Regex = "([^:]+)(?::\\d+)?"
# Replacement = "$1:80"
# TargetLabel = "__address__"

# With Ibex enabled, the ibex servers setting in Categraf's config can point to this edge's IP address
[Ibex]
Enable = true
RPCListen = "0.0.0.0:20090"

[Redis]
# address, ip:port or ip1:port,ip2:port for cluster and sentinel(SentinelAddrs)
# Set to the local Redis (cluster) address of this edge data center
Address = "127.0.0.1:6379"
# Username = ""
# Password = ""
# DB = 0
# UseTLS = false
# TLSMinVersion = "1.2"
# standalone cluster sentinel
RedisType = "standalone"
# Mastername for sentinel type
# MasterName = "mymaster"
# SentinelUsername = ""
# SentinelPassword = ""
```
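`CenterApi.BasicAuthUser` and `BasicAuthPass` are HTTP Basic auth credentials, and the pair must match an entry under `[HTTP.APIForService.BasicAuth]` in the central Nightingale configuration. The Go sketch below shows both sides with the standard `net/http` helpers; `newCenterRequest`, `authorized`, and the request path are hypothetical and are not n9e-edge's real endpoints.

```go
package main

import (
	"crypto/subtle"
	"fmt"
	"net/http"
)

// newCenterRequest builds a GET request carrying HTTP Basic auth, the scheme
// implied by BasicAuthUser/BasicAuthPass. The path is a hypothetical example.
func newCenterRequest(addr, path, user, pass string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodGet, addr+path, nil)
	if err != nil {
		return nil, err
	}
	req.SetBasicAuth(user, pass)
	return req, nil
}

// authorized models the center-side check against a
// [HTTP.APIForService.BasicAuth] table (user -> token), comparing the token
// in constant time.
func authorized(req *http.Request, table map[string]string) bool {
	user, pass, ok := req.BasicAuth()
	if !ok {
		return false
	}
	want, found := table[user]
	return found && subtle.ConstantTimeCompare([]byte(pass), []byte(want)) == 1
}

func main() {
	center := map[string]string{"user001": "ccc26da7b9aba533cbb263sdklf902384"}
	req, err := newCenterRequest("http://192.168.137.20:17000", "/example", "user001", "ccc26da7b9aba533cbb263sdklf902384")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Header.Get("Authorization"))
	fmt.Println("accepted by center:", authorized(req, center))
}
```

A token mismatch on either side shows up as rejected requests from the edge, so compare both files first when the edge cannot sync from the center.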

```shell
cat > /etc/systemd/system/n9e-edge.service <<'EOF'
[Unit]
Description="n9e-edge"
After=network.target

[Service]
Type=simple
ExecStart=/data/n9e-edge/n9e-edge -configs /data/n9e-edge/etc/edge
WorkingDirectory=/data/n9e-edge/etc/edge
Restart=on-failure
SuccessExitStatus=0
LimitNOFILE=65536
StandardOutput=syslog
StandardError=syslog
SyslogIdentifier=n9e-edge
OOMScoreAdjust=-1000

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable --now n9e-edge.service
systemctl status n9e-edge.service
```

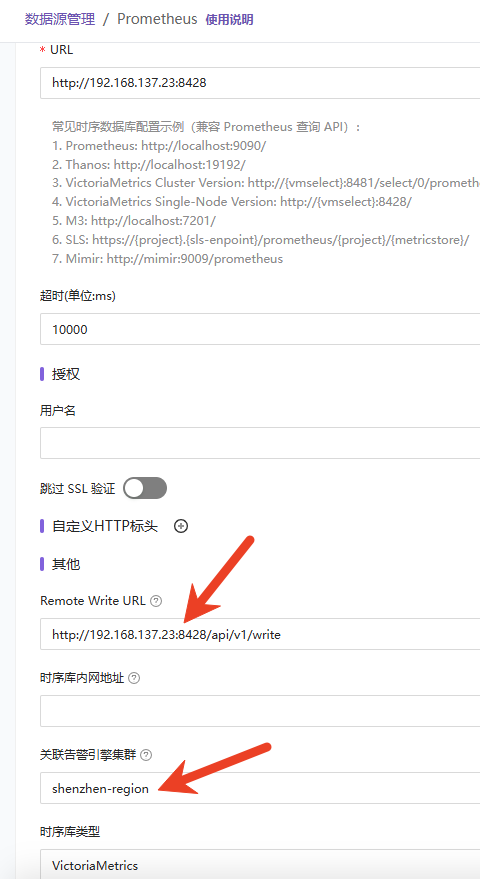

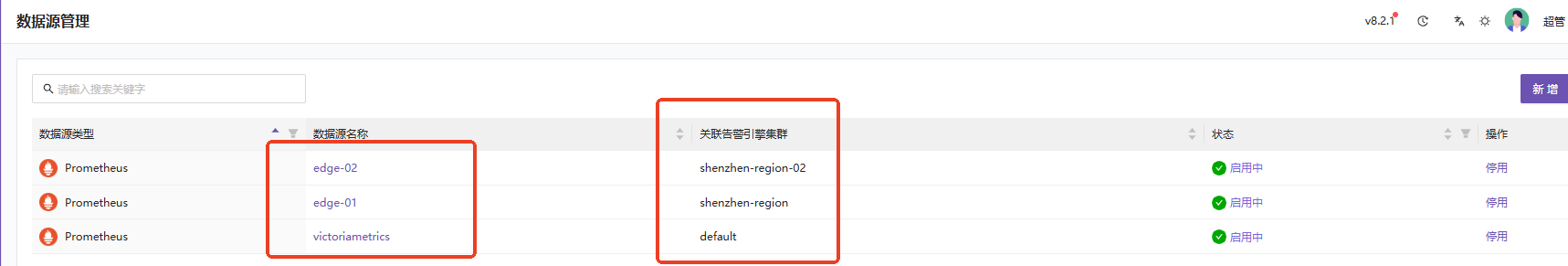

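3.1 Configure the data source of every edge data center and associate it with the corresponding alert engine (EngineName).

3.2 Use business groups to separate different collectors, for example exporters and Telegraf.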

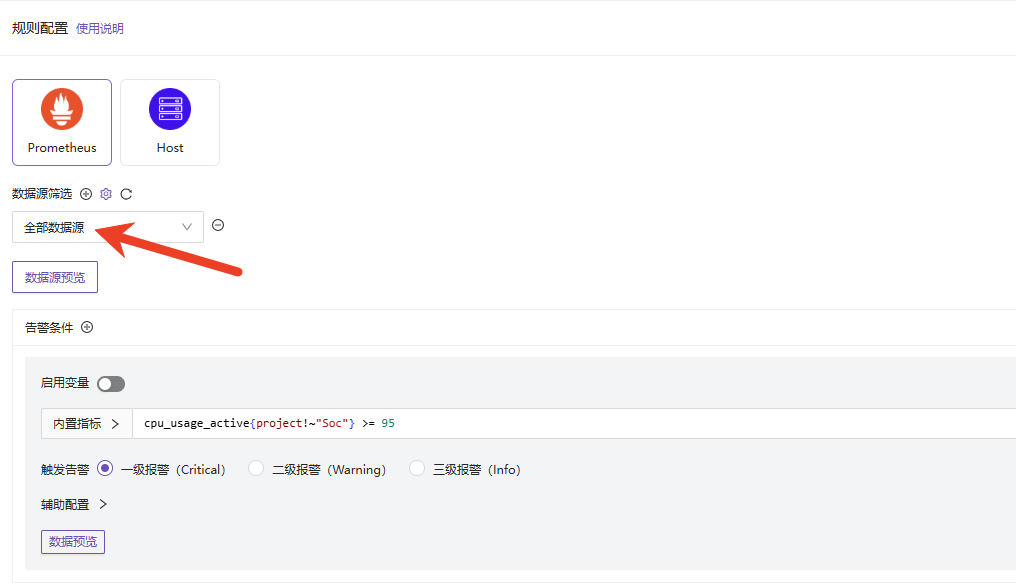

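3.3 Associate the alert rules with the data sources of all edge data centers. For this test, the rule matches the edge data source exactly.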

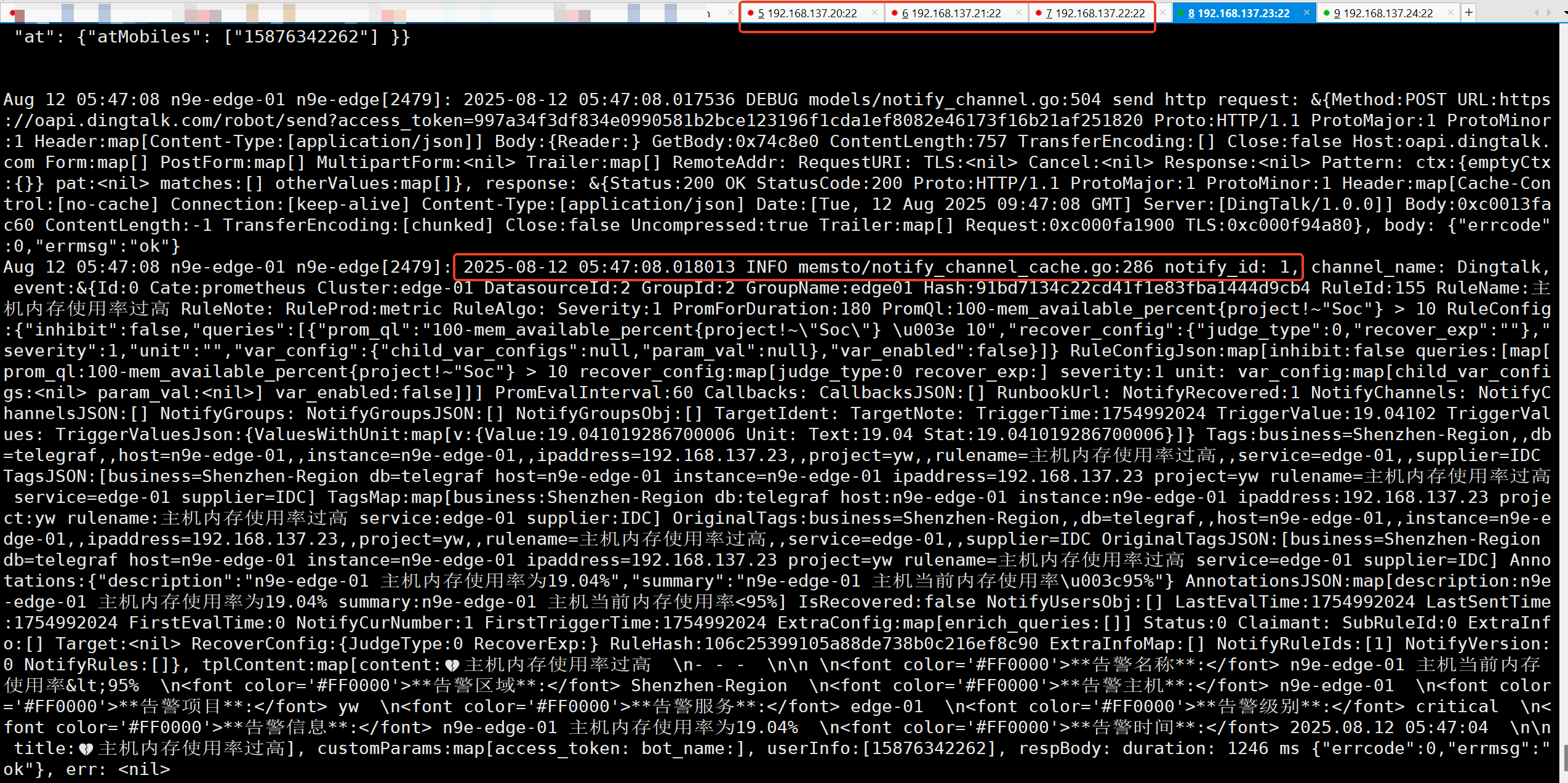

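3.4 Lower the alert threshold so that Nightingale updates the rule and pushes it down to n9e-edge in the edge data center.

3.5 Immediately cut power to the 3-node central cluster, including Nightingale, to simulate a failure.

3.6 Watch the edge logs to verify that the edge data center still sends the pushed-down alerts.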

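- With the central data center down and only the edge data center running, n9e-edge evaluates the alert rules against the local VictoriaMetrics and sends the alert notifications itself.

- After the central data center recovers, n9e-edge does not send the recovery notification, because the central VictoriaMetrics is back and Nightingale (n9e) has taken over. Restore the normal threshold at this point. If the threshold is left lowered, the next alert is triggered by n9e.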
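While the central data center is down, n9e-edge evaluates rules against the local VictoriaMetrics, so it helps to confirm that this instance answers queries before and during the test. Single-node VictoriaMetrics serves the Prometheus-compatible `/api/v1/query` endpoint; the Go sketch below only builds an instant-query URL for it. `instantQueryURL` is hypothetical, and `up{business="SZ"}` is an example expression based on the `business` label in the Categraf config; substitute the expression of the rule under test.

```go
package main

import (
	"fmt"
	"net/url"
)

// instantQueryURL builds a Prometheus-compatible instant query URL for a
// VictoriaMetrics base address, URL-encoding the PromQL expression.
func instantQueryURL(base, promql string) string {
	v := url.Values{}
	v.Set("query", promql)
	return base + "/api/v1/query?" + v.Encode()
}

func main() {
	// Example expression; replace it with the expression of the rule under test.
	fmt.Println(instantQueryURL("http://192.168.137.23:8428", `up{business="SZ"}`))
}
```

Fetch the printed URL with curl; a non-empty `data.result` array indicates that the edge VictoriaMetrics holds data for the rule to evaluate.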

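4. Simulate a Failure of Two Edge Data Centers

4.1 Bulk-edit all alert rules to match all data sources, so that the central data center and every edge data center are associated.

4.2 Lower the threshold of one alert rule, then immediately cut power to both edge data centers to simulate a failure.

- Because the edge vmagent instances also push data to the central data center, the lowered rule is still evaluated against the monitoring data stored centrally, and the alert is triggered from there.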

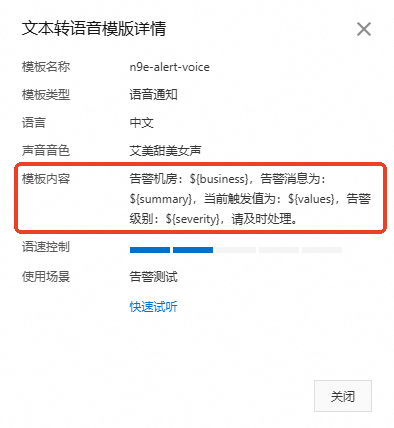

5. Configure Phone-Call Alerts

5.1 Create a voice notification template in Alibaba Cloud

Template content:

```
Alert data center: ${business}. Alert message: ${summary}. Current value: ${values}. Severity: ${severity}. Please handle it promptly.
```
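Every `${name}` placeholder in the Alibaba Cloud template must be supplied by a key of the same name in `TtsParam` (5.3). The Go sketch below extracts the placeholder names with a regular expression and lists those without a matching key; `missingParams` is hypothetical, and the sample values are made up.

```go
package main

import (
	"fmt"
	"regexp"
	"sort"
)

// placeholder matches ${name} variables in an Alibaba Cloud voice template.
var placeholder = regexp.MustCompile(`\$\{(\w+)\}`)

// missingParams returns, sorted and without duplicates, the placeholders in
// the template that have no matching key in params.
func missingParams(template string, params map[string]string) []string {
	seen := map[string]bool{}
	var missing []string
	for _, m := range placeholder.FindAllStringSubmatch(template, -1) {
		name := m[1]
		if _, ok := params[name]; !ok && !seen[name] {
			missing = append(missing, name)
		}
		seen[name] = true
	}
	sort.Strings(missing)
	return missing
}

func main() {
	tpl := "Alert data center: ${business}. Alert message: ${summary}. Current value: ${values}. Severity: ${severity}. Please handle it promptly."
	params := map[string]string{"business": "SZ", "summary": "disk full", "severity": "critical"}
	fmt.Println(missingParams(tpl, params)) // [values]
}
```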

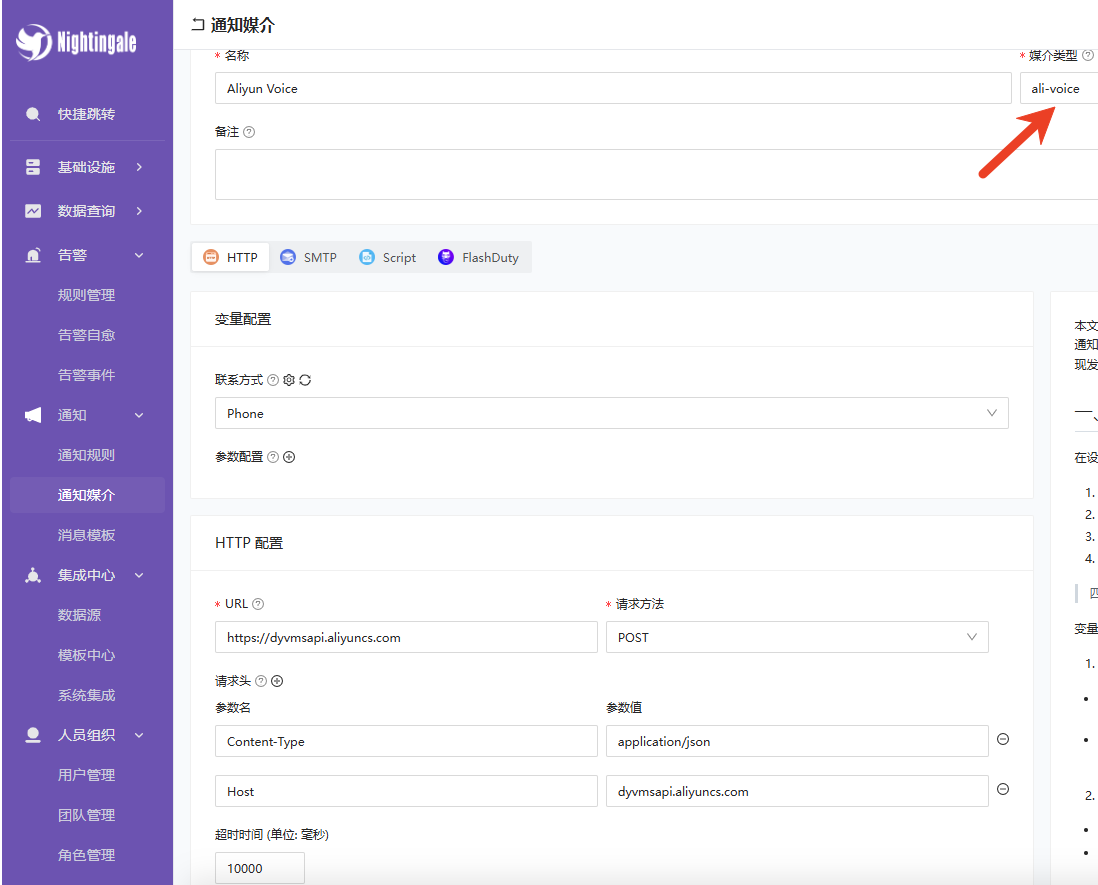

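5.2 Create an Aliyun_Voice notification medium in Nightingale

5.3 Fill in the request parameters with the Alibaba Cloud template's TtsCode, phone numbers, and related details: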

```
AccessKeyId=LTAI5tQhsjxxxxx
AccessKeySecret=30cFbeMS4xxxx
CalledNumber={{ $sendto }}
CalledShowNumber=<Alibaba Cloud caller number>
TtsCode=<template code>
TtsParam={"business":"{{$tpl.business}}","summary":"{{$tpl.summary}}","values":"{{$tpl.values}}","severity":"{{$tpl.severity}}"}
```
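Here `TtsParam` is assembled by placing template values inside JSON string literals. If a value such as the summary contains a double quote or backslash, the result would no longer be valid JSON unless it is escaped; whether Nightingale escapes these values is not covered here. For comparison, the Go sketch below builds the same object with `encoding/json`, which escapes values correctly; `ttsParam` is a hypothetical helper and the sample values are made up.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ttsParam builds the TtsParam JSON object for the voice call. encoding/json
// escapes quotes and backslashes in values and sorts map keys, so the output
// is valid and deterministic.
func ttsParam(business, summary, values, severity string) (string, error) {
	b, err := json.Marshal(map[string]string{
		"business": business,
		"summary":  summary,
		"values":   values,
		"severity": severity,
	})
	return string(b), err
}

func main() {
	s, err := ttsParam("SZ", `disk "/data" almost full`, "95.2", "critical")
	if err != nil {
		panic(err)
	}
	fmt.Println(s) // {"business":"SZ","severity":"critical","summary":"disk \"/data\" almost full","values":"95.2"}
}
```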

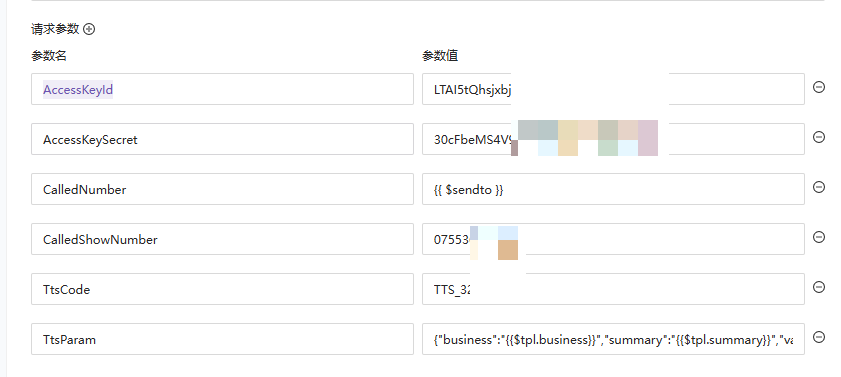

5.4 Configure the message template: edit the Aliyun Voice template.

Each key must match the template key referenced in TtsParam above; for example, `{{$tpl.business}}` requires the key `business`:

```
business={{$event.TagsMap.business}}
severity={{if eq $event.Severity 1}}critical{{else if eq $event.Severity 2}}warning{{else}}info{{end}}
summary={{$event.AnnotationsJSON.summary}}
values={{$event.TriggerValue}}
```

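5.5 Add the relevant people to the team, and select that team as the recipient in the notification rule.

5.6 Add the corresponding notification channel to the notification rule, then test the phone-call alert.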